What led to Facebook and Twitter’s decision to suspend Ugandan accounts?

Wednesday January 13 2021

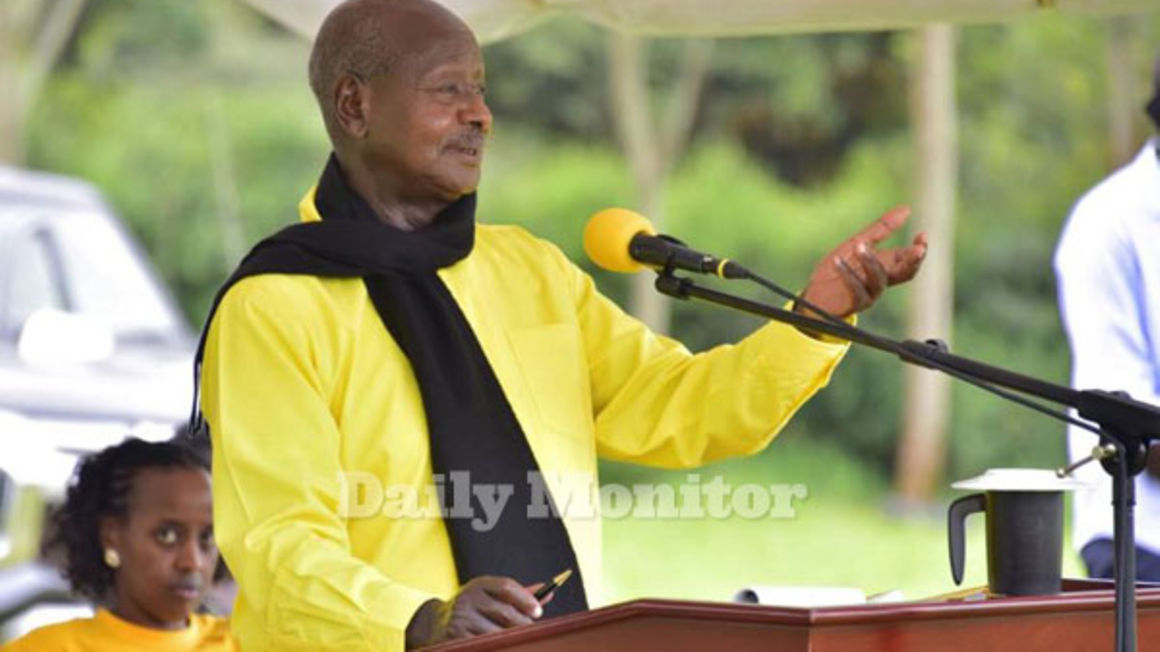

President Yoweri Museveni has retaliated by blocking social media platforms during this election period (PHOTO: KELVIN ATUHAIRE)

On Friday, the social media platform Facebook suspended a number of Ugandan accounts closely connected to the ruling National Resistance Movement (NRM) party. Twitter, another social media platform, followed suit and by Sunday had suspended a few accounts that pushed for the re-election of President Yoweri Kaguta Museveni.

In his presidential address on Tuesday, Museveni expressed his anger towards the tech giants and defended the decision to ban social media use during the 2021 general elections, saying that kind of behaviour is unacceptable.

“There is no way anybody can come and play around with our country. To decide who is good...who is bad? We cannot accept that,” President Museveni said.

The decision to ban the use of social media during the election period has been met with mixed reactions from all over the globe. Most Ugandans oppose it for the inconvenience it has caused, while many outside the Pearl believe it serves the tech giants right for interfering in the country’s elections.

But what inspired Facebook and Twitter’s decision to suspend NRM-leaning accounts?

Digital forensic analysts from the Digital Forensic Research Lab (DFRLab) carried out an investigation into disinformation connected to the 2021 general elections. DFRLab’s research has been instrumental in the shutdown and suspension of social media accounts belonging to users all over the world in the last few months.

Tessa Knight, a research assistant at the DFRLab, told South African news channel Newzroom Afrika that what caught their attention were old, outdated images being used to smear the National Unity Platform’s (NUP) presidential flag bearer, Robert Kyagulanyi, also known as Bobi Wine.

“I noticed that there was a network of accounts spreading false images from protests of 2011, 2013 saying that this is evidence of Bobi Wine’s supporters being hooligans,” Knight said.

According to the DFRLab report, the network, which included public relations firms, news organisations and inauthentic social media accounts, also spammed information praising NRM’s flag bearer, Yoweri Museveni.

“The accounts responded quickly to negative comments about Museveni, using the same copied and pasted text,” the DFRLab report reads.

The accounts used the same copied and pasted text to reply to people.

DFRLab then forwarded its suspicions to Facebook and Twitter. Facebook conducted its own investigation into DFRLab’s claims.

Facebook concluded that the accounts were involved in what it terms Coordinated Inauthentic Behaviour (CIB). Facebook would later release a statement attributing this network to the Ministry of Information and Communications Technology (ICT).

“We found this network to be linked to the Government Citizens Interaction Center at the Ministry of Information and Communications Technology in Uganda,” the statement from Facebook read.

Facebook would later shut down accounts including those of Kampala Times and Robusto Communications.

“RobustoUg posted a combination of articles by Kampala Times and information that looked like news stories tagged #KampalaTimes, although the page only started sharing posts by Kampala Times on July 2 2020. Posts that did not follow this format were primarily copied and pasted from other Ugandan websites without accreditation,” the report reads.

What exactly is Coordinated Inauthentic Behaviour?

In Facebook’s Community Standards, CIB is defined as the use of multiple Facebook or Instagram assets, working in concert to engage in Inauthentic Behavior, where the use of fake accounts is central to the operation.

Inauthentic behaviour simply means misrepresenting yourself on social media platforms. This may include using fake accounts, artificially boosting the popularity of content, or engaging in behavior designed to enable other violations under Facebook’s Community Standards.

In DFRLab’s report on the 2021 election campaigns, seven of the 10 accounts suspected of involvement in disinformation did not contain any identifiable information.

“Their banner images and profile pictures were lifted from sites such as Pinterest and they posted no personal information about the account operators,” the report reads.

This raised a red flag for Facebook, whose guidelines and Community Standards do not allow individuals to misrepresent themselves. The platform says that with these guidelines it intends to create a space where individuals can trust the people and communities they interact with.

“Per our normal reporting process, we will share more details about the networks we removed this month in our January CIB report, which we’ll release at the beginning of February,” the Facebook statement reads.